Dossiers are the foundation for bringing a drug to market and for its ongoing regulation. However, the sheer number of documents they contain makes it hard to find the necessary information quickly, hindering the company's ability to respond promptly to requests from supervisory bodies. One of our clients asked us to help solve this problem with a tool that could retrieve the information stored in thousands of files without anyone having to go through them one by one. Here's how it went.

Our client, an international pharmaceutical group, had great difficulty retrieving the information contained in its dossiers: the collections of thousands of technical, clinical and regulatory documents that are essential for obtaining marketing authorization for a drug and ensuring it is properly regulated.

The challenge, therefore, was to quickly find the data needed to answer requests from regulatory bodies.

Each question meant searching the dossiers for specific references, a task that forced users to manually browse tens of thousands of files, even when they started from an initial search in Veeva CRM.

The system supports only traditional keyword search.

This is useful for locating potentially relevant documents, but not enough to find the information of interest right away.

As a result, users had to open the files identified by the system one by one, scroll through the pages and find the requested information on their own.

The result was a slow, costly and error-prone process that jeopardized the company's ability to respond promptly to regulators.

To eliminate the many inefficiencies in this process, we developed a chatbot based on Retrieval-Augmented Generation (RAG), integrated directly into the company's Veeva-based CRM.

The way it works is simple, yet sophisticated.

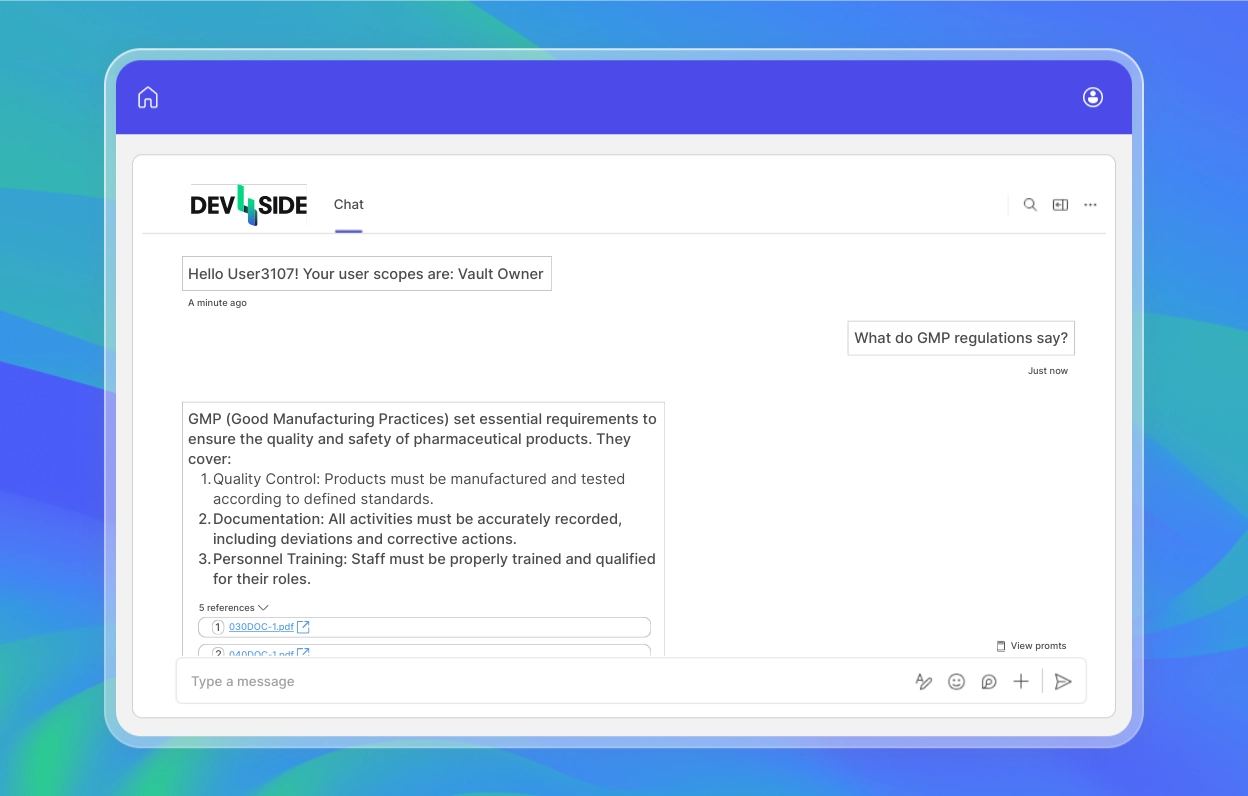

Users ask the chatbot questions in natural language, as if they were talking to a colleague. The artificial intelligence processes the request, connects to Veeva Vault (the company's document repository) through Veeva CRM, and generates a response.

Unlike traditional search, which returns a list of documents, the chatbot provides the requested information itself, already extracted from company files and accompanied by links to the original documents, so that the user can verify each answer or explore it further.
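To make the idea of an answer "accompanied by links to the original documents" more concrete, here is a minimal Python sketch of how retrieved passages could be turned into a numbered source list appended to the generated answer. It is an illustration, not the project's code: the `Passage` class, its fields and the `format_answer` function are hypothetical names, and we assume each retrieved passage carries the title and URL of the document it came from.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    """A retrieved text fragment (hypothetical structure) plus a pointer to its source."""
    text: str
    doc_title: str
    doc_url: str  # hypothetical link back to the document in the repository


def format_answer(answer: str, passages: list[Passage]) -> str:
    """Append a deduplicated, numbered list of source links to a generated answer."""
    seen: set[str] = set()
    sources: list[str] = []
    for p in passages:
        # Several passages may come from the same document: list each document once.
        if p.doc_url not in seen:
            seen.add(p.doc_url)
            sources.append(f"[{len(sources) + 1}] {p.doc_title}: {p.doc_url}")
    if not sources:
        return answer
    return answer + "\n\nSources:\n" + "\n".join(sources)
```

Deduplicating by URL keeps the source list short when the search returns several passages from the same dossier document, while preserving the order in which documents were first retrieved.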

Behind the scenes, our solution uses Azure AI Search to index the dossiers, turning their contents into data the chatbot can "digest".

When a question comes in, it is matched against the index, and the generative model receives the most relevant passages as context. This lets the chatbot return an accurate answer within seconds, grounded in the data in the company's knowledge base.
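The retrieve-then-generate flow described above can be illustrated with a small, self-contained Python sketch. It is a toy stand-in for a search index, not the Azure AI Search API, and the article does not say which retrieval mode the project used: here documents are split into overlapping chunks, each chunk is represented as a term-frequency vector, and a question is matched by cosine similarity. The top passages are then placed in a prompt for the generative model. All names (`InMemoryIndex`, `chunk`, `build_prompt` and so on) are hypothetical, and a production index would typically rely on model-generated embeddings rather than word counts.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep runs of letters/digits as tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split a document into windows of `size` words overlapping by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Hypothetical stand-in for a search index: stores chunk vectors and their source document."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, str, Counter]] = []  # (doc_id, chunk_text, vector)

    def add_document(self, doc_id: str, text: str) -> None:
        for piece in chunk(text):
            self.entries.append((doc_id, piece, Counter(tokenize(piece))))

    def search(self, query: str, top_k: int = 3) -> list[tuple[str, str, float]]:
        """Return up to `top_k` (doc_id, chunk_text, score) hits with a non-zero score."""
        q = Counter(tokenize(query))
        scored = [(doc_id, piece, cosine(q, vec)) for doc_id, piece, vec in self.entries]
        scored = [hit for hit in scored if hit[2] > 0]
        scored.sort(key=lambda hit: hit[2], reverse=True)
        return scored[:top_k]


def build_prompt(question: str, hits: list[tuple[str, str, float]]) -> str:
    """Place the retrieved passages, tagged with their document ids, before the question."""
    context = "\n".join(f"[{doc_id}] {piece}" for doc_id, piece, _ in hits)
    return (
        "Answer using only the context below and cite the document ids.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

The string returned by `build_prompt` is what would be sent to the generative model; that call is left out here because the article does not specify it. Tagging each passage with its document id is what makes it possible to link every answer back to its source.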

What's more, the entire process takes place without leaving the Veeva CRM environment.

The heart of our solution is Retrieval-Augmented Generation (RAG), a term not everyone may be familiar with.

Retrieval-Augmented Generation is an architecture that combines large language models (LLMs) with systems specialized in information retrieval, in order to provide more accurate, context-aware answers drawn directly from a company's internal knowledge base.

It thus overcomes the limitations of general-purpose LLMs such as ChatGPT, which are trained on large volumes of generic data that the company neither controls nor keeps up to date, and which therefore often fail to answer the specific questions employees need answered.

Generic generative artificial intelligence has already proven useful, but in a business environment it still runs into important obstacles.

This is where RAG comes into play.

Instead of generating answers solely from what the model learned during training, RAG adds a semantic search engine that retrieves the most relevant content from company documents in real time.

With this approach, a solution like our chatbot does not "invent" the answer: it bases it on the retrieved documents and returns the information the user needs, simplifying their work.

To learn more about this technology, check out our project "Retrieval-Augmented Generation, with Azure OpenAI".

The solution was immediately adopted by all staff.

This was thanks partly to the template created by our team, which speeds up the development of tailor-made RAG solutions, and partly to the simplicity of the conversational interface and its direct integration with Veeva CRM, a tool employees already knew.

From the first days of use, the client expressed great satisfaction, especially with the drastic reduction in the time spent searching for information.

Searches that previously required minutes, if not hours, of manual browsing now take a few seconds, with answers based solely on verified documentation.

Needless to say, optimizing this single process alone has brought clear benefits to the company.

The Modern Apps team responds swiftly to IT needs where software development is the core component, including solutions that integrate artificial intelligence. The technical staff is trained specifically in delivering software projects based on Microsoft technology stacks and has expertise in managing both agile and long-term projects.