Retrieval Augmented Generation (RAG) is a framework that enhances the capabilities of large language models (LLMs) by integrating an information retrieval system. This system retrieves relevant data from a specified corpus to ground the model's responses, making them more accurate and contextually relevant. The Azure OpenAI service lets developers implement RAG on top of the robust infrastructure and artificial intelligence capabilities offered by Azure. In this article, we look at how Microsoft's cloud platform makes it possible to implement RAG in business AI solutions.

As we enter the world of generative artificial intelligence, we are at the forefront of a technological revolution.

These advanced systems can create text, images and even code, pushing the boundaries of what we thought was possible in the interaction between humans and machines.

From amplifying creative processes to solving complex problems, generative AI is transforming industrial sectors and opening new avenues for innovation. However, this transformation is not without complications, especially in the business environment.

What kind of complications?

To begin with, generalist models are trained on public data and don't know the policies, processes, or terminology used in your company.

In addition, every time someone inside an organization sends a request to an external tool, they risk sharing sensitive data with a system that offers no strong privacy guarantees: a risk no one can afford.

Not to mention that many of the biggest models currently on the market are trained on static datasets, so keeping them current requires frequent and lengthy retraining or fine-tuning.

Otherwise, your model risks giving outdated answers that can end up sowing confusion and misinformation in the workplace.

In short, a good deal of concern.

Things have changed, however, with the arrival of Retrieval Augmented Generation (RAG) in AI models.

Implemented together with the development environments provided by Azure OpenAI, RAG can make a real difference for any business that wants to use the latest advances in AI to strengthen and optimize its digital infrastructure.

We'll look at this in more detail in the next few sections, but first, a brief review.

Azure OpenAI combines the infrastructure of Microsoft's cloud computing platform with the advanced artificial intelligence models developed by OpenAI.

This combination lets organizations access and use cutting-edge AI capabilities, such as natural language understanding and automatic content generation, within their Azure cloud environments.

The service provides users with access to AI models, including the well-known GPT-4 (Generative Pre-trained Transformer 4) language model, and other cutting-edge technologies developed by OpenAI.

These models can understand and generate text with a high degree of sophistication, enabling automatic content creation, virtual customer support apps and assisted code writing.

GPT-4, for example, can generate consistent and relevant responses from minimal input, which makes it well suited to advanced chatbots, virtual assistants and text generation tools. Its uses go further, though: the model can also analyze and summarize large amounts of text, supporting research and data analysis in any sector.

Azure OpenAI is designed to be accessible to both experienced AI professionals and newcomers.

The APIs provided are intuitive and easy to use, allowing companies to quickly integrate artificial intelligence capabilities into their existing applications. This reduces (though does not eliminate) the need for advanced technical skills and speeds up the development and rollout of AI-based solutions.

The platform offers a wide range of capabilities, including:

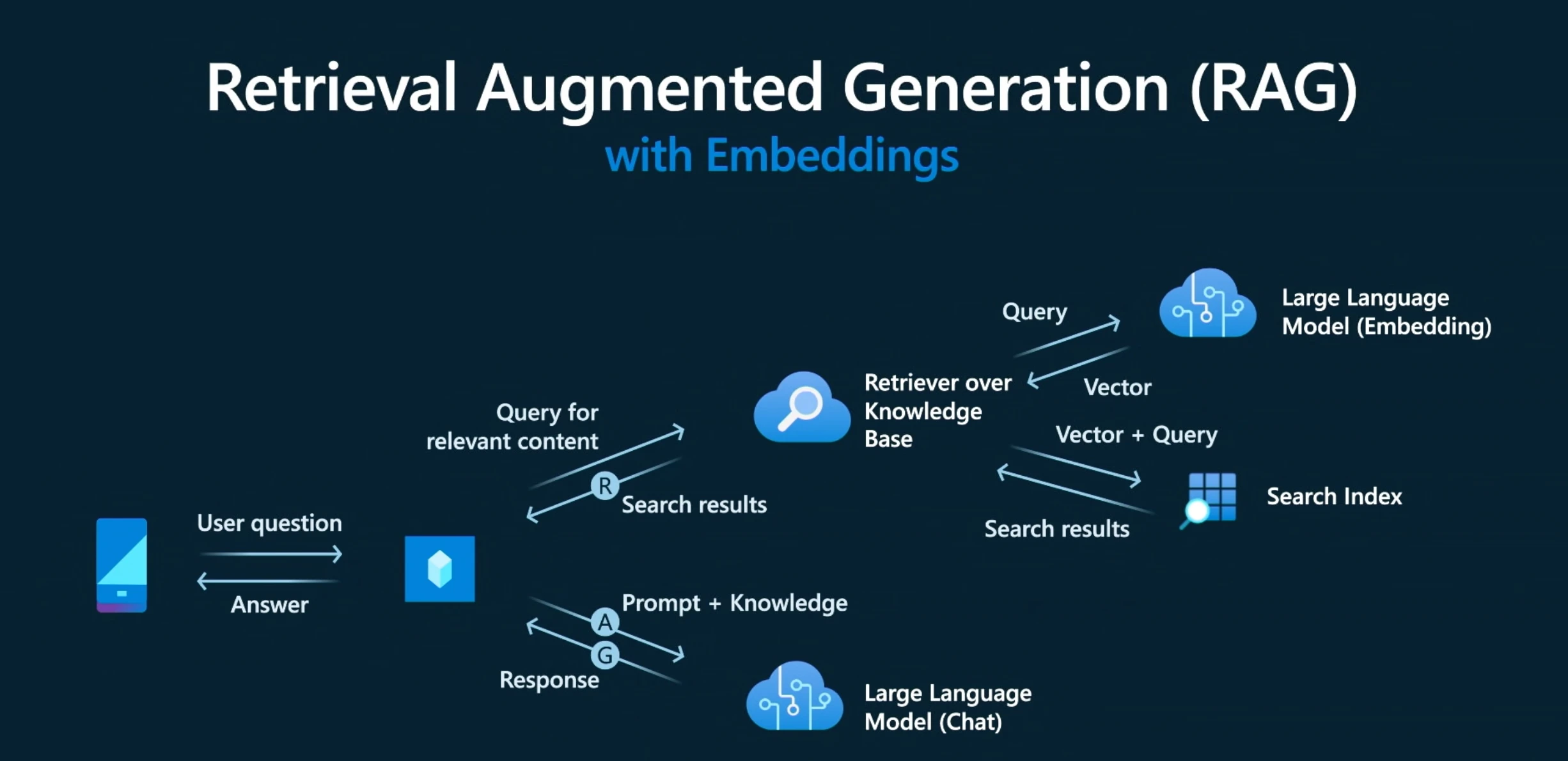

Retrieval Augmented Generation is a technique that improves the accuracy and reliability of generative artificial intelligence models using information retrieved from specific, relevant data sources. In other words, it fills a gap in how large language models (LLMs) work.

At their core, LLMs are neural networks, usually measured by the number of parameters they contain. An LLM's parameters essentially represent the general patterns of how humans use words to form sentences.

This deep understanding, sometimes called parameterized knowledge, makes LLMs useful for answering general requests. However, it falls short for users who need insights into very specific information.

Patrick Lewis, lead author of the 2020 paper that coined the term, and his colleagues developed Retrieval Augmented Generation to link generative AI services to external resources, especially those rich in up-to-date technical details.

RAG gives models sources they can cite, like footnotes in a scientific paper, so that users can verify any claim. This helps build trust.

In addition, the technique can help models resolve ambiguities in the user's request. It also reduces the chance that the model will give a plausible-sounding but incorrect answer, a phenomenon known as hallucination.

Another great advantage of RAG is its relative simplicity.

In a blog post, Lewis and three of the paper's co-authors explain that developers can implement the process with as few as five lines of code. This makes the method faster and cheaper than retraining a model on new datasets, and it lets users swap reference sources on the fly.

With Retrieval Augmented Generation, users can essentially hold conversations with data repositories, paving the way for new experiences. This means the potential applications of RAG grow with the number of available datasets.

Almost any company can turn its technical or regulatory manuals, videos, or logs into resources called knowledge bases, which can strengthen LLMs. These sources enable use cases such as customer or field support, staff training, and developer productivity.


RAG could be the keystone for applying generative artificial intelligence beyond general-purpose use, giving this technology the impetus it needs to become a core part of every corporate digital infrastructure, at every level and in every sector.

In this section, we list the main benefits the RAG framework can bring to AI-driven operations.

Large language models (LLMs) are trained at a specific point in time on a fixed set of data. RAG models, by contrast, rely on external knowledge bases to retrieve relevant, up-to-date information in real time before generating responses.

RAG allows responses to be based on current and additional data, rather than relying solely on the model's training dataset.

RAG-based systems are particularly effective when the required data changes constantly. By integrating real-time data, RAG models expand the scope of what an application can do, including, for example, live customer support, travel planning, or processing insurance claims.

RAG excels at providing context-rich answers by retrieving data specifically relevant to the user's request. It does so through retrieval algorithms that identify the most relevant documents or data fragments within large and heterogeneous collections of information.
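To make "identifying the most relevant fragments" concrete, here is a minimal Python sketch of one common retrieval approach, vector similarity search. It assumes every text chunk already has an embedding vector; the three-dimensional vectors and sample sentences are invented for illustration (real embedding models produce hundreds or thousands of dimensions), and dedicated search services typically rely on approximate nearest-neighbor indexes rather than scoring every vector as this sketch does.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], chunks: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the texts of the k chunks whose embeddings are most similar to the query."""
    scored = [(cosine(query_vec, vec), text) for text, vec in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Toy "embeddings": a real system would obtain these from an embedding model.
chunks = [
    ("Expense reports are due by the 5th of each month.", [0.9, 0.1, 0.0]),
    ("The VPN must be used on public Wi-Fi.", [0.0, 0.2, 0.9]),
    ("Travel expenses need manager approval.", [0.6, 0.5, 0.2]),
]
query = [0.85, 0.2, 0.05]  # pretend embedding of "When are expense reports due?"
print(top_k(query, chunks, k=2))
```

Cosine similarity is used here because it compares the direction of vectors regardless of their length; other scoring functions (dot product, Euclidean distance) are equally valid choices depending on the embedding model.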

By exploiting contextual information, RAG allows artificial intelligence systems to generate answers tailored to specific user needs and preferences. In addition, RAG helps organizations maintain data privacy: there is no need to retrain a third-party proprietary model, and the data stays where it resides.

RAG allows a controlled flow of information, balancing retrieved data against generated content to keep answers consistent while minimizing fabrications.

Many RAG implementations provide transparent source attribution, citing where the retrieved information came from and adding a layer of accountability (both fundamental to ethical AI practice).

This auditability not only increases user confidence, but it also aligns with the regulatory requirements of many sectors, where accountability and traceability are essential. RAG, therefore, strengthens trust and significantly improves the accuracy and reliability of content generated by artificial intelligence.
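Source attribution is largely bookkeeping on the application side: it keeps the list of documents it retrieved and maps the model's citation markers back to that list. The sketch below assumes the model was instructed to cite sources with markers such as `[doc1]` and `[doc2]`, numbered in the order the sources were supplied; the marker format, the `resolve_citations` helper and the intranet URLs are hypothetical.

```python
import re


def resolve_citations(answer: str, sources: list[dict]) -> list[dict]:
    """Map [docN] markers in a generated answer back to the retrieved sources.

    Assumes sources[0] corresponds to [doc1], sources[1] to [doc2], and so on.
    Markers that point outside the list are ignored; each source is listed once.
    """
    cited = []
    seen = set()
    for match in re.finditer(r"\[doc(\d+)\]", answer):
        index = int(match.group(1)) - 1
        if 0 <= index < len(sources) and index not in seen:
            seen.add(index)
            cited.append(sources[index])
    return cited


# Hypothetical retrieved documents, in the order they were given to the model.
sources = [
    {"title": "Travel policy", "url": "https://intranet.example.com/travel"},
    {"title": "IT security handbook", "url": "https://intranet.example.com/security"},
]
answer = "Travel must be approved in advance [doc1]; use the VPN abroad [doc2] [doc1]."
for source in resolve_citations(answer, sources):
    print(f"{source['title']}: {source['url']}")
```

Ignoring out-of-range markers is a deliberate choice: a model can occasionally emit a citation that matches no supplied source, and showing it to the user would undermine the auditability the paragraph above describes.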

RAG allows organizations to use existing data and knowledge bases without extensive retraining of large language models (LLMs). This is achieved by augmenting the model's input with relevant retrieved data, rather than requiring the model to learn that information from scratch.

This approach significantly reduces the costs associated with developing and maintaining artificial intelligence systems.

Organizations can deploy RAG-enabled applications faster and more efficiently, since there is no need to invest significant resources in training large models on proprietary data.
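The augmentation step described above, combining retrieved data with the model's input, can be sketched in a few lines. In this minimal Python illustration, retrieved chunks are numbered and placed in a system message together with instructions, and the user's question follows as a separate message. The `build_messages` helper and the wording of the instructions are illustrative assumptions; only the role/content message format mirrors the one used by chat completion APIs such as Azure OpenAI's.

```python
def build_messages(question: str, chunks: list[str]) -> list[dict]:
    """Combine retrieved chunks and the user's question into chat messages."""
    # Number each chunk so the model can cite it as [doc1], [doc2], ...
    sources = "\n\n".join(f"[doc{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    instructions = (
        "Answer using only the sources below and cite them as [docN]. "
        "If the sources do not contain the answer, say that you don't know."
    )
    return [
        {"role": "system", "content": f"{instructions}\n\nSources:\n{sources}"},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    "When are expense reports due?",
    ["Expense reports are due by the 5th of each month.", "Travel needs manager approval."],
)
for message in messages:
    print(f"{message['role']}: {message['content']}\n")
```

Keeping the retrieved data in the system message and the question in the user message is one common layout; the model itself is untouched, which is why no retraining is needed when the sources change.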

RAG helps to increase user productivity by allowing them to quickly access accurate and contextually relevant data, thanks to an effective combination of information retrieval systems and generative artificial intelligence.

This integrated approach simplifies and speeds up the process of collecting and analyzing data, significantly reducing the time needed to identify useful information. As a result, decision makers can focus their attention on concrete and strategic insights, improving the quality and timeliness of decisions.

At the same time, work teams can automate repetitive and time-consuming tasks, freeing up resources to dedicate to activities with greater added value.

In addition, this greater efficiency not only speeds up workflows, but also fosters better collaboration between departments, since the most up-to-date and relevant information is easy to access and share.

When you decide to implement a RAG system in your company, the platform you build it on makes a real difference. Azure OpenAI is a valuable choice for anyone who wants to build AI solutions on the Microsoft cloud, both for the performance of its integrated language models and for all the advantages offered by the Azure ecosystem.

Which ones?

For example, one of the main limitations on the business usefulness of the public generative AI models mentioned in the introduction has always been data security. Every time a worker uses a tool like ChatGPT, they risk sharing sensitive information with an uncontrolled environment.

Azure OpenAI does not pose this problem, since user requests are processed directly in the customer's Microsoft cloud, without leaving the corporate security perimeter. This provides strong privacy protection and supports regulatory compliance.

But security isn't a company's only concern: you probably also want your AI solution to integrate with the tools employees use every day.

Azure OpenAI makes exactly this possible: the generative component of a RAG system can be integrated with all the Microsoft tools that make up the digital workplace (such as SharePoint, Teams, OneDrive and Microsoft Viva), as well as with a company's custom applications (web, desktop or mobile), to meet any need, even outside the Microsoft 365 ecosystem.

And, of course, RAG with Azure OpenAI lets developers use supported chat models that ground their responses in specific sources of information.

Supplying this information lets the model draw on both the specific data provided and the knowledge it acquired during pre-training, producing more effective answers.

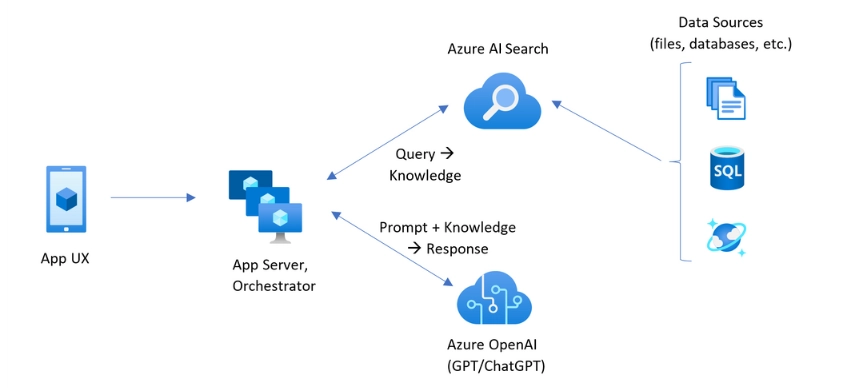

Azure OpenAI enables RAG by connecting pre-trained models to your data sources. Azure OpenAI On Your Data uses the search capabilities of Azure AI Search to add relevant chunks of data to the prompt.

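Once the data is in an Azure AI Search index, Azure OpenAI processes each request in the following steps: it receives the user's prompt, determines the intent and relevant content of the prompt, queries the search index with that content and intent, inserts the retrieved chunks into the prompt together with the system message and the user's message, sends the entire prompt to the model, and returns the response to the user along with references to the source data, if any.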

You can add data through the chat playground in Azure AI Studio or by specifying the data source in an API call. The added data source is then used to augment the request sent to the model.
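When the data source is specified in an API call, it travels in the body of a chat completions request. The Python sketch below only builds that body and does not send it. Its shape follows the `data_sources` format documented for Azure OpenAI On Your Data in recent API versions (2024-02-01 and later; earlier preview versions used a different structure), and the search endpoint, index name and key are hypothetical placeholders. Check the REST reference for the API version you target before relying on any field.

```python
import json


def on_your_data_body(question: str, *, search_endpoint: str, index_name: str, search_key: str) -> dict:
    """Build a chat completions request body that grounds answers in an Azure AI Search index."""
    return {
        "messages": [{"role": "user", "content": question}],
        # The data source Azure OpenAI should query before generating the answer.
        "data_sources": [
            {
                "type": "azure_search",
                "parameters": {
                    "endpoint": search_endpoint,
                    "index_name": index_name,
                    "authentication": {"type": "api_key", "key": search_key},
                },
            }
        ],
    }


body = on_your_data_body(
    "What is our travel approval policy?",
    search_endpoint="https://my-search.search.windows.net",  # hypothetical search service
    index_name="company-docs",  # hypothetical index
    search_key="<search-admin-key>",  # placeholder: never hard-code real keys
)
print(json.dumps(body, indent=2))

# The body would be POSTed to
#   {azure_openai_endpoint}/openai/deployments/{deployment}/chat/completions?api-version=...
# With the openai Python package, the same list is typically passed as
#   extra_body={"data_sources": [...]} to chat.completions.create on an AzureOpenAI client.
```

Building the body in a separate function keeps the data source configuration in one place, so the same index settings can be reused by the playground-equivalent calls in scripts and tests.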

When configuring data in the studio, you can upload data files, use data in an Azure Blob Storage account, or connect to an existing Azure AI Search index.

If you upload files or use files already in a storage account, Azure OpenAI On Your Data supports .md, .txt, .html, .pdf, and Microsoft Word or PowerPoint files. If any of these files contain graphics or images, the quality of the response depends on the quality of the text that can be extracted from the visual content.
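Before uploading a folder of documents, it can help to check which files fall within the supported formats. This is a minimal Python sketch; the mapping of Word and PowerPoint to the `.docx` and `.pptx` extensions is an assumption, and `split_supported` is a hypothetical helper, not part of any Azure tool.

```python
from pathlib import Path

# Assumed mapping of the supported formats to file extensions
# (.docx/.pptx assumed for Word and PowerPoint).
SUPPORTED_EXTENSIONS = {".md", ".txt", ".html", ".pdf", ".docx", ".pptx"}


def split_supported(paths: list[str]) -> tuple[list[str], list[str]]:
    """Separate file names the data source can ingest from those it would skip."""
    supported, skipped = [], []
    for path in map(Path, paths):
        # Compare case-insensitively so "Report.PDF" is treated like "report.pdf".
        if path.suffix.lower() in SUPPORTED_EXTENSIONS:
            supported.append(path.name)
        else:
            skipped.append(path.name)
    return supported, skipped


print(split_supported(["policy.pdf", "slides.pptx", "budget.xlsx", "README.md"]))
# (['policy.pdf', 'slides.pptx', 'README.md'], ['budget.xlsx'])
```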

When uploading data or connecting to files in a storage account, the recommended approach is to use Azure AI Studio to create the search resource and index.

Adding data this way ensures it is properly divided into chunks when it is inserted into the index, which produces better answers. If you are working with large documents or text files, use the available data preparation script to improve the accuracy of the AI model.

Enabling semantic search on the Azure AI Search service may improve search results over your data index, and you will probably receive higher-quality answers and citations.

However, enabling semantic search may increase the cost of the search service.

You can also use the wizard in the Azure AI Search resource to vectorize your data appropriately. Note that if you use the wizard in AI Studio to create and connect the data source, you will need to create a hub and a project.

Given the combination of more efficient AI models and Microsoft development environments, could our experts resist experimenting with new solutions to solve customer problems and help them face the digital challenges that AI has brought with it?

Obviously not.

After seeing the difficulties our customers faced in using generative AI, we designed an alternative solution based on our own RAG technology.

Business users need answers that are relevant to their reality, based on internal documents, and verifiable, without compromising the security of shared data. We immediately understood that the missing link between generative AI and these needs was precisely a RAG system.

Despite the complexity of managing sensitive business data and integrating an AI system into structured environments such as Microsoft 365, we built a RAG architecture that makes it easier to retrieve information in the digital workplace, using Azure OpenAI together with other Azure services to extend its capabilities and get the best results.

After releasing the first projects, we monitored, together with our customers, the impact of this new technology on their digital workplace, and the results were extremely positive.


Azure OpenAI RAG is a solution that integrates the Retrieval Augmented Generation technique into the Microsoft cloud ecosystem. It combines the generative capabilities of advanced language models, such as GPT-4, with an information retrieval system to produce answers that are more accurate, up to date and appropriate to the business context.

The RAG framework improves language model responses by adding information retrieved from external sources, such as internal documents or business databases. In this way, the answers are not based solely on what was learned during training, but are enriched with current and specific content, keeping relevance high and reducing the risk of errors.

Azure provides a secure, scalable environment that can be fully integrated with business tools such as SharePoint, Teams and OneDrive. A RAG system implemented with Azure OpenAI keeps data within the business perimeter, ensuring compliance with privacy regulations and seamless continuity with existing digital solutions.

The main difference lies in security. When using public ChatGPT, data passes into uncontrolled external environments. With Azure OpenAI, on the other hand, requests are managed directly in the customer's Microsoft cloud, which means that sensitive information never leaves the corporate infrastructure, ensuring greater control and compliance.

RAG allows companies to obtain answers based on reliable, up-to-date sources while keeping control over the data the model uses. This approach reduces errors, improves the quality of responses, speeds up access to information and makes better use of the digital resources already available in the company, without having to completely retrain the models.

The system receives a request, identifies its purpose and relevant content, queries a search index to retrieve the most relevant data, inserts this data into the prompt together with the user's message and sends everything to the model to generate the response. This process can be managed through Azure AI Studio or the API and can be fed with text files, Office documents, PDFs, or content already stored in Azure Blob Storage or Azure AI Search indexes.
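The request flow above can be sketched end to end with pluggable parts. In this minimal Python illustration, `search` and `generate` are hypothetical stand-ins: a naive keyword-overlap search over an in-memory list replaces Azure AI Search, and a fixed-reply function replaces the Azure OpenAI deployment, so the example runs offline. Identifying the request's purpose is simplified to using the user's message directly as the query.

```python
from typing import Callable


def answer_question(
    question: str,
    search: Callable[[str, int], list[dict]],
    generate: Callable[[list[dict]], str],
    top_n: int = 3,
) -> dict:
    """Retrieve, augment, generate, then return the answer with its references."""
    # Query the search index (here: the user's message is used as the query as-is).
    hits = search(question, top_n)
    # Insert the retrieved data into the prompt together with the user's message.
    context = "\n".join(f"[doc{i}] {hit['content']}" for i, hit in enumerate(hits, start=1))
    messages = [
        {"role": "system", "content": f"Answer using only these sources:\n{context}"},
        {"role": "user", "content": question},
    ]
    # Send everything to the model and return its reply plus the sources supplied.
    reply = generate(messages)
    return {"answer": reply, "references": [hit["title"] for hit in hits]}


# Hypothetical in-memory stand-ins for Azure AI Search and an Azure OpenAI deployment.
DOCS = [
    {"title": "Travel policy", "content": "Trips abroad require manager approval."},
    {"title": "Expense guide", "content": "Submit receipts within 30 days."},
]


def keyword_search(query: str, top_n: int) -> list[dict]:
    """Rank documents by how many query words they share (a deliberately naive scorer)."""
    terms = set(query.lower().split())
    ranked = sorted(DOCS, key=lambda d: len(terms & set(d["content"].lower().split())), reverse=True)
    return ranked[:top_n]


def fake_model(messages: list[dict]) -> str:
    return "Trips abroad require manager approval [doc1]."


result = answer_question("Do trips abroad need approval?", keyword_search, fake_model, top_n=1)
print(result)
```

Passing the search and generation steps in as functions keeps the orchestration testable offline; in a real deployment they would wrap calls to the search index and the model deployment.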

Azure OpenAI supports .txt, .md, .html, .pdf, Word, and PowerPoint files. The files can be uploaded manually, retrieved from Azure Blob Storage or taken from an existing search index. The quality of the answers depends on the clarity and textual structure of the uploaded content, especially for documents containing graphics or images.

Retraining the model is not necessary. The strength of RAG lies precisely in providing the model with updated information without having to modify or retrain the entire LLM. This reduces costs, simplifies adoption, and allows greater flexibility in updating information sources.

Dev4Side has implemented a RAG solution that allows business users to query their document bases securely and precisely. The goal was to overcome the limitations of generalist AI, offering answers based on internal content without sacrificing data protection. The project has led to more efficient information management and a concrete improvement in the user experience of the digital workplace.

The Modern AI Apps team responds swiftly to IT needs where software development is the core component, including solutions that integrate artificial intelligence. The technical staff is trained specifically in delivering software projects based on Microsoft technology stacks and has expertise in managing both agile and long-term projects.